In our family, we’re preparing for our first teenage driver later this summer. Honestly, I’m excited about it: a significant portion of our afternoons and weekends is spent driving kids to and from their various activities, so having another driver in the house will be a relief.

But going through the process (making sure we have the right number of vehicles, helping Ben learn the basics in preparation for driver’s ed, and thinking through with the kids what this new level of autonomy will mean) gives you a fresh perspective on our vehicle-based society. Especially where we live, things are very spread out, so no car means being cut off, and for a teenager, having a car changes everything.

As we prepared for this, we decided to look for a low-cost electric vehicle, no easy feat in this day and age. What we found turned out to be a perfect fit, especially as gas prices started to climb. Our little i3 can do 3-4 round trips into town for kids’ activities, or one round-trip airport run, on a single charge. The American Midwest has very little charging infrastructure, but installing the necessary equipment at home was way cheaper and easier than you’d think. This arrangement changes how you think about travel. While on the one hand you can’t easily extend a trip by “filling up” at a station, on the other there’s remarkable freedom in not having to use gas stations at all. The other day I realized my combustion-engine-equipped Saab was almost out of gas, and was disappointed to remember that I’d have to drive it somewhere to fix that problem; guzzling gas makes you dependent on someone else. The fact that it costs $70 to fill that tank right now is shocking, especially for teenagers dreaming about freedom.

Of course, there are various nuances to this…

– We’re dependent on someone else for our electricity as well, but the remedy is a fun future project to install some solar panels. I have a couple of friends embarking on multi-month efforts to power their entire homes with renewables; I’ll start with learning how to charge the car. Since it’s equipped with DC-direct ports, I won’t need an inverter, which should save some capital. But that project is strictly for the fun and challenge of it: the impact on our electricity bill from charging our car has been negligible; it barely even registers.

– Electric car batteries aren’t great for the environment. Building them requires mining for minerals such as lithium and cobalt, and taking them apart at the end of their life is difficult. But progress is being made on new types of batteries that are more environmentally friendly, and recycling is improving. One great use I’ve seen for old EV batteries recently is as a back-up for home solar systems.

– Our electric car isn’t fully electric. It has a built-in backup generator called a range extender (Rex). The Rex isn’t connected to the drive train, so it’s not technically considered a hybrid vehicle. In the US, it was intended to kick in when the battery charge is low (around 7%) and charge the battery directly. In Europe, the Rex could hold the state of charge at any level below 75%, so of course I reprogrammed the car’s computer to think it’s European! Since the car is from 2015, some of the electronics need work, and we often need a phone handy to clear error codes to get the Rex to kick in, but having it available creates confidence that we’re not going to face a dead battery in the middle of a country road somewhere. Filling the generator’s tank costs about $6 once every month or two.

For now, we’ll have three cars: two with combustion engines, and the electric. The electric car gets the most use, the SUV is for big hauls or long trips, and I’m trading down my Saab for one that’s more appropriate for the kids to learn on. Someday, I’d love to go all electric. It’ll take time to phase out gasoline vehicles: prices need to go down, ranges need to be longer, and infrastructure needs to be built out. But both our governments (US and Canada) have set ambitious goals for the transition, and after a few months with an electric car I’m convinced that the future of transportation should be electric, for one main reason: energy diversification.

A traditional combustion engine has one energy source, and individuals and communities can’t make it themselves. Bio-fuel was a false lead; it’s worse for the environment than oil. Electricity can be made from dirty sources, like oil and coal (and we’ll probably need those sources for longer than we’d like), but it can also be made from wind, water, sun, or the heat of the earth, and some of those things can be harnessed at home, or in smaller regional projects. Diversifying our energy sources gives us options, and allows us to respond faster when we learn new things, or face new challenges. There’s no better illustration of this than sailing past a gas station, smiling as those suckers pump their hard-earned cash into their tanks…

cheap, or terribly different looking, but it’s a good effort at making a repairable handset. The


One of the first times I heard about Linux was during my freshman year at college. The first-year curriculum for Computer Programmer/Analyst was not particularly challenging: they used it as a level-set year, so they assumed everyone was new to computers and programming. I’d been trying to teach myself programming since I was 11, and I was convinced I knew everything (a conviction solidified when one of the profs asked me to help him update his curriculum). I don’t even remember what class it was for, but we were each to present some technology, and this kid, who looked to be straight out of the movie Hackers, got up and preached a sermon about a new OS that was going to change the world: Linux. Now there was something I didn’t know…

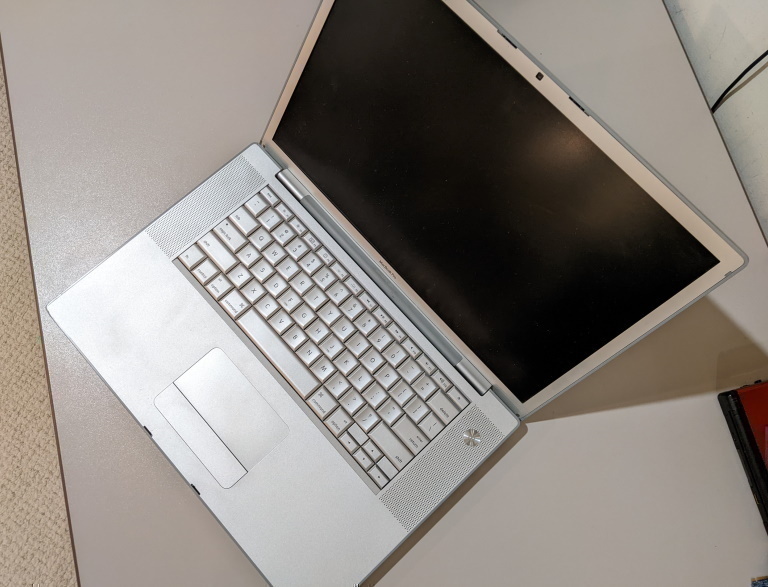


It was around the same time that the “second coming” of Steve Jobs was occurring, and the battered Mac community was excited about their salvation. The iMac wowed everyone, and OS X provided the real possibility that Macintosh might survive to see the 21st century after all. I went irresponsibly into debt buying myself an iMac SE, an offering to the fruit company that would recur with almost every generation of Apple technology. I sold all my toys and stood in line for the first iPhone. I bent over backward to get someone to buy me the first MacBook Pro with a 64-bit Intel processor. My first Mac ever was rescued from a dumpster, and I’ve saved many more since.

You can do it now, although the output is significantly less meaningful or helpful on the modern web. Dig around your browser’s menus and it’s still there somewhere: the opportunity to examine the source code of this and other websites.


The swan song of the Apple II platform was called the IIGS (the letters stood for Graphics and Sound), which supported most of the original Apple II software line-up, but introduced a GUI based on the Macintosh OS — except in color! The GS was packed with typical Wozniak wizardry, relying less on brute computing power (since Jobs insisted its clock speed be limited to avoid competing with the Macintosh) and more on clever tricks to coax almost magical capabilities out of the hardware (just try using a GS without its companion monitor, and you’ll see how much finesse went into making the hardware shine!)
